How to download an entire website from Google Cache?

If a website was recently deleted and the Wayback Machine did not save its latest version, how can you recover the content? Google Cache can help. All you need is the DownThemAll browser plugin: https://www.downthemall.net/

1 - Install the DownThemAll plugin in your browser.

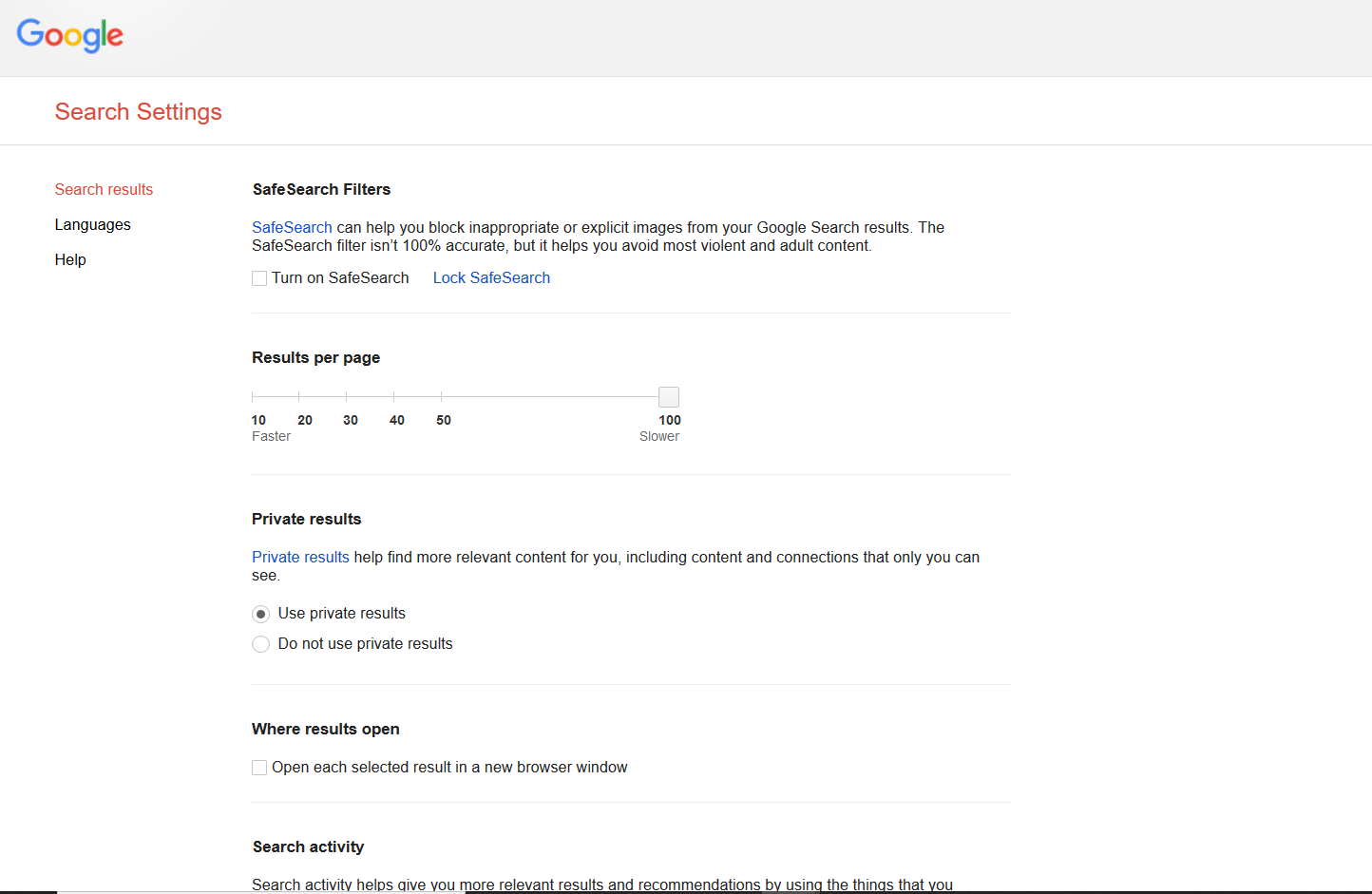

2 - Open Google Search in the browser and set "100 Results per page" in the "Settings" - "Search Settings" menu. This gives you more cached pages to download per click. Unfortunately, 100 results per page is the maximum Google Search allows:

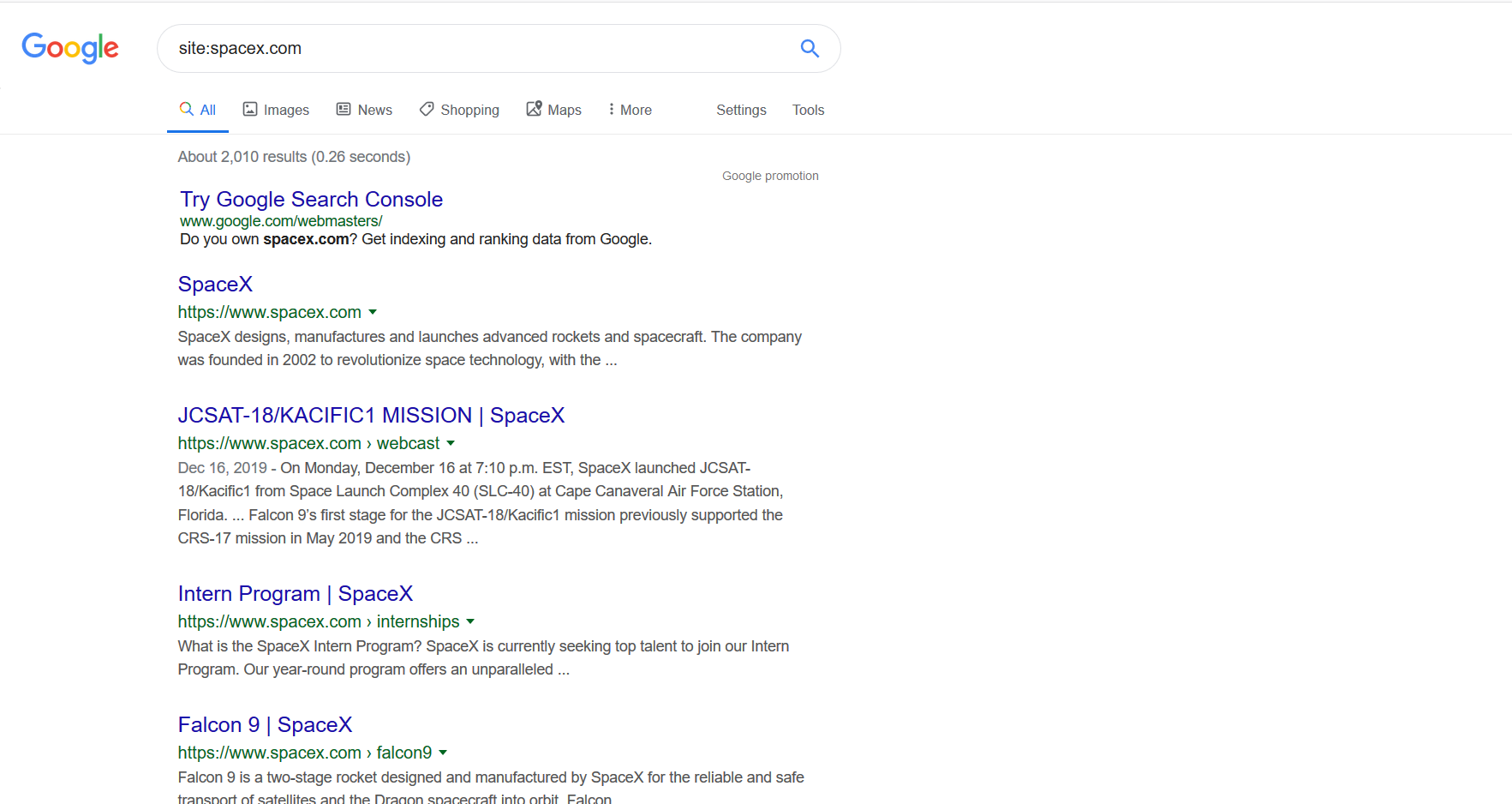

3 - Find all cached pages of your site on Google. Just enter this in the search field: site:yourwebsite.com. Here is an example with spacex.com:

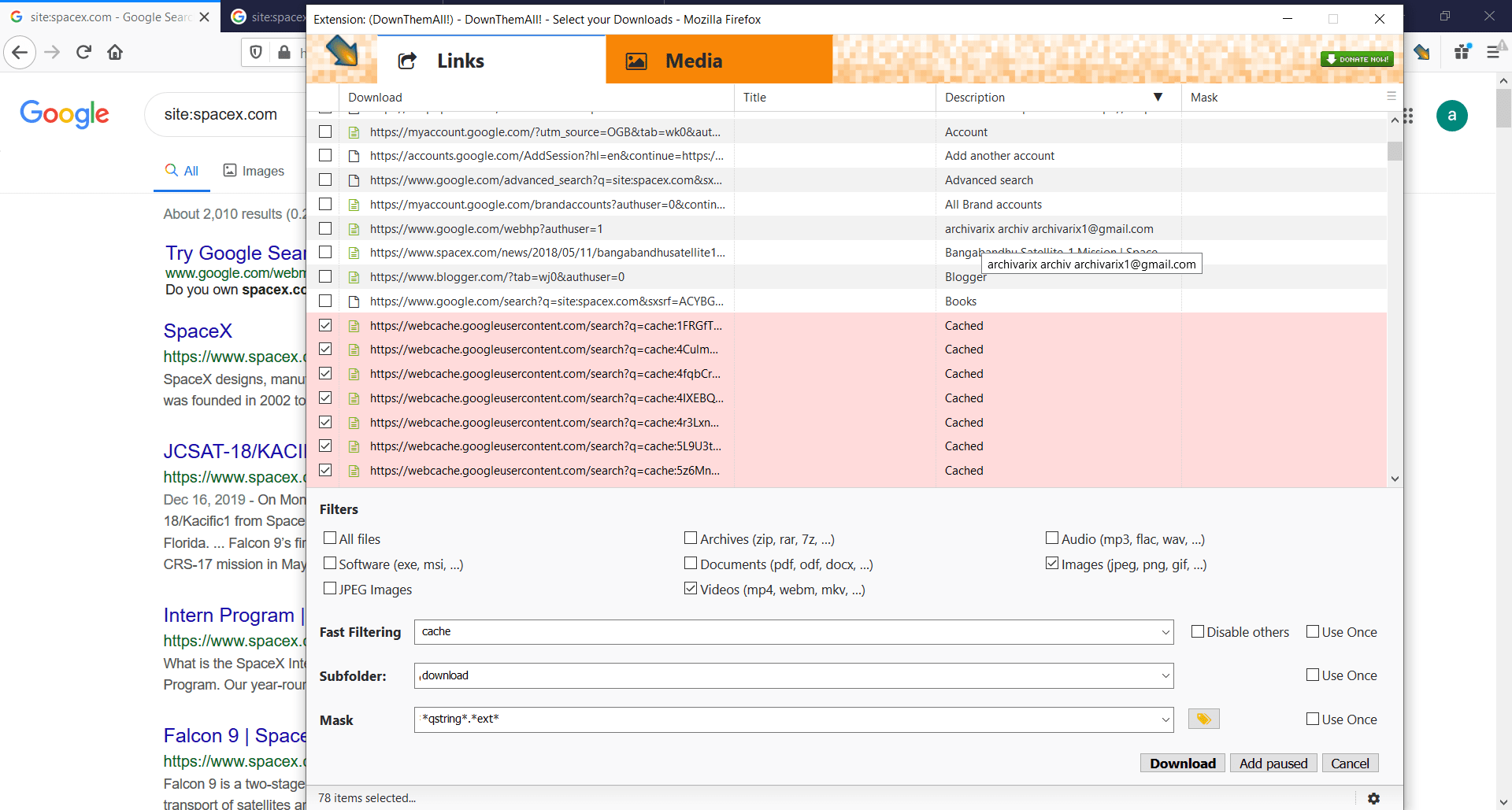
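Steps 2 and 3 can also be expressed as a single search URL. The snippet below is a minimal sketch, not part of the original method: the helper name `build_site_query_url` is hypothetical, and the `num` URL parameter is assumed to mirror the "Results per page" setting, which Google may ignore or change.

```python
from urllib.parse import urlencode


def build_site_query_url(domain: str, results_per_page: int = 100) -> str:
    """Return a Google search URL that lists indexed pages of `domain`."""
    params = {
        "q": f"site:{domain}",    # the same query typed into the search field in step 3
        "num": results_per_page,  # assumption: URL counterpart of "Results per page"
    }
    return "https://www.google.com/search?" + urlencode(params)


print(build_site_query_url("spacex.com"))
```

Opening the printed URL in the browser should show the same result list that DownThemAll works on in the next step.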

4 - In the DownThemAll plugin, enter cache in the "Fast Filtering" field. This regular expression selects all cached pages. Press the Download button and wait for... a download error.
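To see what the `cache` filter does, here is a small sketch that applies the same regular expression to a list of links. The sample URLs are made up for illustration, and the `webcache.googleusercontent.com` address is an assumption about what cached-page links look like on the results page.

```python
import re

# The same expression that is typed into the "Fast Filtering" field.
CACHE_PATTERN = re.compile(r"cache")


def select_cached_links(links):
    """Keep only the links whose URL contains the word "cache"."""
    return [link for link in links if CACHE_PATTERN.search(link)]


# Hypothetical links, as they might appear on a search results page.
links = [
    "https://www.spacex.com/vehicles/falcon-9/",
    "https://webcache.googleusercontent.com/search?q=cache:abc123:https://www.spacex.com/vehicles/falcon-9/",
    "https://www.google.com/preferences",
]

for link in select_cached_links(links):
    print(link)
```

Because the pattern is a plain substring match, it also selects any ordinary page whose URL happens to contain "cache". If that causes false positives, a stricter pattern such as `webcache\.googleusercontent\.com` may work better, under the same assumption about the link format.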

5 - After 100 or more files have been downloaded, Google will interrupt the process and ask you to verify yourself with a captcha. The DownThemAll plugin can't solve captchas; it simply stops downloading. So you need to return to Google Search, open any search result, solve the captcha manually, and restart the download. This gives you the next batch of files to download.
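The stop-and-resume cycle from step 5 can be sketched as a loop that downloads until it hits a captcha and then returns the URLs that are still pending. This is an illustration only, not how DownThemAll works internally. All function names are hypothetical, and the captcha check (HTTP status 429 or a redirect to a `/sorry/` page) is an assumption about how the block shows up. The `fetch` argument stands in for whatever performs the real request; a fake one is used here so the sketch runs without network access.

```python
def looks_like_captcha(status, final_url):
    """Assumption: a block appears as HTTP 429 or a redirect to a "/sorry/" page."""
    return status == 429 or "/sorry/" in final_url


def download_until_blocked(urls, fetch):
    """Fetch URLs in order and stop at the first captcha.

    `fetch(url)` must return a (status, final_url, body) tuple.
    Returns (saved, remaining): the bodies downloaded so far and the
    URLs to retry after the captcha has been solved manually.
    """
    urls = list(urls)
    saved = {}
    for index, url in enumerate(urls):
        status, final_url, body = fetch(url)
        if looks_like_captcha(status, final_url):
            return saved, urls[index:]
        saved[url] = body
    return saved, []


def make_fake_fetch(limit):
    """Build a stand-in fetcher that starts returning a captcha after `limit` requests."""
    calls = {"count": 0}

    def fetch(url):
        calls["count"] += 1
        if calls["count"] > limit:
            return 429, "https://www.google.com/sorry/index", b""
        return 200, url, b"<html>cached copy</html>"

    return fetch


saved, remaining = download_until_blocked(["a", "b", "c"], make_fake_fetch(2))
print(len(saved), "saved;", len(remaining), "left for the next batch")
```

After solving the captcha in the browser, calling `download_until_blocked(remaining, ...)` again continues with the next batch, which matches the manual restart described above.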

As you can see, the process is not fully automated, but it is quite fast and completely free. If you want to scrape thousands or millions of cached pages, the better way is to buy an SEO tool with a "google cache scraper" option.

The use of article materials is allowed only if the link to the source is posted: https://archivarix.com/en/blog/download-website-google-cache/


- Full localization of Archivarix CMS into 13 languages (English, Spanish, Italian, German, French, Portuguese, Polish, Turkish, Japanese, Chinese, Russian, Ukrainian, Belarusian).

- Export all current site data to a zip archive to save a backup or transfer to another site.

- Show and remove broken zip archives in import tools.

- PHP version check during installation.

- Information for installing CMS on a server with NGINX + PHP-FPM.

- In the search, when the expert mode is on, the date/time of the page and a link to its copy in the WebArchive are displayed.

- Improvements to the user interface.

- Code optimization.

If you are a native speaker of a language into which our CMS has not yet been translated, then we invite you to make our product even better. Via Crowdin service you can apply and become our official translator into new languages.

- CLI support to deploy websites right from a command line, imports, settings, stats, history purge and system update.

- Support for password_hash() encrypted passwords that can be used in CLI.

- Expert mode to enable additional debug information, experimental tools and direct links to WebArchive saved snapshots.

- Tools for broken internal images and links can now return a list of all missing urls instead of deleting.

- Import tool shows corrupted/incomplete zip files that can be removed.

- Improved cookie support to catch up with requirements of modern browsers.

- A setting to select the default editor for HTML pages (visual editor or code).

- Changes tab showing text differences is off by default, can be turned on in settings.

- You can roll back to a specific change in the Changes tab.

- Fixed XML sitemap url for websites that are built with a www subdomain.

- Fixed removal of temporary files that were created during an installation/import process.

- Faster history purge.

- Removed unused localization phrases.

- Language switch on the login screen.

- Updated external packages to their most recent versions.

- Optimized memory usage for calculating text differences in the Changes tab.

- Improved support for old versions of php-dom extension.

- An experimental tool to fix file sizes in the database in case you edited files directly on a server.

- An experimental and very raw flat-structure export tool.

- An experimental public key support for the future API features.

- Fixed: History section did not work when there was no zip extension enabled in php.

- New History tab with details of changes when editing text files.

- .htaccess edit tool.

- Ability to clean up backups to the desired rollback point.

- "Missing URLs" section removed from Tools as it is accessible from the dashboard.

- Monitoring and showing free disk space in the dashboard.

- Improved check of the required PHP extensions on startup and initial installation.

- Minor cosmetic changes.

- All external tools updated to latest versions.

- Separate password for safe mode.

- Extended safe mode. Now you can create custom rules and files, but without executable code.

- Reinstalling the site from the CMS without having to manually delete anything from the server.

- Ability to sort custom rules.

- Improved Search & Replace for very large sites.

- Additional settings for the "Viewport meta tag" tool.

- Support for IDN domains on hosting with the old version of ICU.

- In the initial installation with a password, the ability to log out is added.

- If .htaccess is detected during integration with WP, then the Archivarix rules will be added to its beginning.

- When downloading sites by serial number, CDN is used to increase speed.

- Other minor improvements and fixes.

- New dashboard for viewing statistics, server settings and system updates.

- Ability to create templates and conveniently add new pages to the site.

- Integration with WordPress and Joomla in one click.

- Now in Search & Replace, additional filtering is done in the form of a constructor, where you can add any number of rules.

- Now you can filter the results by domain/subdomains, date-time, file size.

- A new tool to reset the cache in Cloudflare or enable/disable Dev Mode.

- A new tool for removing versioning in urls, for example, "?ver=1.2.3" in css or js. Allows you to repair even those pages that looked crooked in the WebArchive due to the lack of styles with different versions.

- The robots.txt tool has the ability to immediately enable and add a Sitemap.

- Automatic and manual creation of rollback points for changes.

- Import can import templates.

- Saved/imported loader settings now include the created custom files.

- For all actions that can last longer than a timeout, a progress bar is displayed.

- A tool to add a viewport meta tag to all pages of a site.

- Tools for removing broken links and images have the ability to account for files on the server.

- A new tool to fix incorrect urlencode links in html code. Rarely, but may come in handy.

- Improved missing urls tool. Together with the new loader, now counts calls to non-existent URLs.

- Regex Tips in Search & Replace.

- Improved checking for missing php extensions.

- Updated all used js tools to the latest versions.

Plus many other cosmetic improvements and speed optimizations.